What are Recurrent Neural Networks (RNNs)?

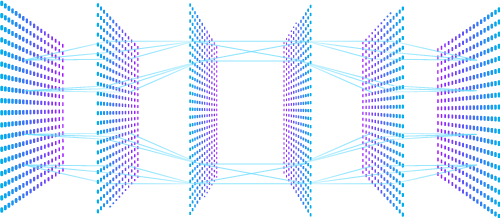

Recurrent Neural Networks (RNNs) are a class of neural networks designed to process sequential data, and they are commonly used for tasks such as language translation, speech recognition, and time series prediction. Unlike traditional feedforward neural networks, which process each input independently, RNNs have a memory component that lets them retain information about previous inputs. This makes them particularly well suited to tasks involving sequential data.

The key feature of an RNN is its ability to maintain an internal state, or “hidden” state, which is updated at each time step based on the current input and the previous hidden state. The updated hidden state is then used to make a prediction for the current time step, and the process is repeated for the next time step. This allows the network to capture the temporal dependencies between the inputs in a sequence.
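The recurrence can be sketched in plain Python. This is a minimal sketch that assumes the common "vanilla" (Elman-style) update h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b_h) and a zero initial hidden state. The weight names and toy values are hypothetical and chosen only for illustration.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x_t: Vector, h_prev: Vector, W_xh: Matrix, W_hh: Matrix, b_h: Vector) -> Vector:
    """One time step: combine the current input with the previous hidden state."""
    pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h_prev), b_h)]
    return [math.tanh(p) for p in pre]


def rnn_forward(xs: List[Vector], W_xh: Matrix, W_hh: Matrix, b_h: Vector) -> List[Vector]:
    """Run the recurrence over a whole sequence and return every hidden state."""
    h = [0.0] * len(b_h)  # assumed zero initial hidden state
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)  # the same weights are reused at every step
        states.append(h)
    return states


# Hypothetical toy sizes: 2-dimensional inputs, 3-dimensional hidden state.
W_xh = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
W_hh = [[0.1, 0.0, 0.2], [0.0, 0.3, -0.1], [0.2, 0.1, 0.0]]
b_h = [0.0, 0.1, -0.1]
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(len(states), len(states[-1]))  # 3 3
```

Because `h` is overwritten at each step and fed back into `rnn_step`, the last hidden state depends on every earlier input, so reordering the sequence generally changes it.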

Here are the main components of an RNN:

Input: At each time step, the RNN receives an input vector, which it processes together with the previous hidden state.

Hidden state: The hidden state is a vector of values that represent the network’s memory. It is updated at each time step based on the current input and the previous hidden state.

Activation function: The activation function is applied to a weighted sum of the current input and the previous hidden state (plus a bias) to produce the new hidden state. The hyperbolic tangent (tanh) is the most common choice for simple RNNs, and the rectified linear unit (ReLU) is sometimes used as an alternative.

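Output: At each time step, the RNN can produce an output computed from the current hidden state, typically through a linear layer. The output can be a single value or a vector of values, depending on the task, and some tasks (such as classifying an entire sequence) use only the output from the final time step.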
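The four components above can be grouped into a single cell. The `SimpleRNNCell` class below is a hypothetical illustration, not the API of any particular library. It keeps the input-to-hidden and hidden-to-hidden weights, lets you pick tanh or ReLU as the activation, and produces an output at each step with a linear layer followed by softmax. The softmax output layer, the random initialization range, and the seed are assumptions made for the example.

```python
import math
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows, cols, rng, scale=0.5):
    """Uniform random weights in [-scale, scale] (an assumed initialization)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def softmax(z):
    """Turn raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


ACTIVATIONS = {
    "tanh": math.tanh,
    "relu": lambda v: max(0.0, v),
}


class SimpleRNNCell:
    """Hypothetical cell grouping the input, hidden state, activation, and output."""

    def __init__(self, input_size, hidden_size, output_size, activation="tanh", seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        self.W_xh = random_matrix(hidden_size, input_size, rng)   # input -> hidden
        self.W_hh = random_matrix(hidden_size, hidden_size, rng)  # hidden -> hidden (recurrence)
        self.b_h = [0.0] * hidden_size
        self.W_hy = random_matrix(output_size, hidden_size, rng)  # hidden -> output
        self.b_y = [0.0] * output_size
        self.activation = ACTIVATIONS[activation]

    def step(self, x_t, h_prev):
        """One time step: returns (new hidden state, output probabilities)."""
        pre = [a + b + c for a, b, c in
               zip(matvec(self.W_xh, x_t), matvec(self.W_hh, h_prev), self.b_h)]
        h_t = [self.activation(p) for p in pre]
        scores = [a + b for a, b in zip(matvec(self.W_hy, h_t), self.b_y)]
        return h_t, softmax(scores)

    def run(self, xs):
        """Process a whole sequence starting from a zero hidden state."""
        h = [0.0] * self.hidden_size
        outputs = []
        for x_t in xs:
            h, y_t = self.step(x_t, h)
            outputs.append(y_t)
        return h, outputs


cell = SimpleRNNCell(input_size=2, hidden_size=4, output_size=3, activation="relu")
final_h, outputs = cell.run([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
print(len(outputs), len(outputs[-1]))  # 3 3
```

Here each entry of `outputs` is a probability vector over three hypothetical classes. A task that needs one prediction per sequence would keep only `outputs[-1]`, or pass `final_h` to a separate classifier.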

RNNs are trained using backpropagation through time (BPTT), which applies the standard backpropagation algorithm used for feedforward neural networks to the RNN unrolled across its time steps. Because the same parameters are reused at every time step, BPTT computes each step's contribution to the gradient of the loss with respect to those parameters and sums the contributions. The accumulated gradients are then used to update the parameters with an optimization algorithm such as stochastic gradient descent (SGD).
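BPTT can be made concrete with a scalar RNN, which keeps the arithmetic readable; a real network uses vectors and weight matrices, but the backward pass has the same structure. This minimal sketch assumes the update h_t = tanh(w_x·x_t + w_h·h_{t-1} + b) and a squared-error loss against a hypothetical target at every time step. The backward loop walks from the last step to the first, and because `w_x`, `w_h`, and `b` are shared across steps, their gradients are summed over all steps before the parameters are updated.

```python
import math


def forward(xs, w_x, w_h, b):
    """Unroll a scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), with h_0 = 0."""
    hs = [0.0]  # hs[t] is the hidden state after t inputs
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1] + b))
    return hs


def loss(xs, ys, w_x, w_h, b):
    """Squared error between each hidden state and its target, summed over time."""
    hs = forward(xs, w_x, w_h, b)
    return sum(0.5 * (h - y) ** 2 for h, y in zip(hs[1:], ys))


def bptt(xs, ys, w_x, w_h, b):
    """Backpropagation through time over the unrolled graph, last step first."""
    hs = forward(xs, w_x, w_h, b)
    g_wx = g_wh = g_b = 0.0
    dh_next = 0.0  # gradient flowing back into h_t from step t+1
    for t in reversed(range(len(xs))):
        h, h_prev = hs[t + 1], hs[t]
        dh = (h - ys[t]) + dh_next   # this step's loss gradient + gradient from later steps
        dpre = dh * (1.0 - h * h)    # derivative of tanh
        # The same weights are used at every step, so their gradients accumulate.
        g_wx += dpre * xs[t]
        g_wh += dpre * h_prev
        g_b += dpre
        dh_next = dpre * w_h         # pass the gradient back to h_{t-1}
    return g_wx, g_wh, g_b


def gradient_step(params, grads, lr=0.1):
    """Plain gradient-descent update; SGD applies this to sampled sequences or mini-batches."""
    return tuple(p - lr * g for p, g in zip(params, grads))


xs = [0.5, -1.0, 0.25, 0.8]
ys = [0.2, -0.3, 0.1, 0.4]   # hypothetical per-step targets
params = (0.3, 0.5, 0.0)     # (w_x, w_h, b), arbitrary starting values

before = loss(xs, ys, *params)
for _ in range(50):
    params = gradient_step(params, bptt(xs, ys, *params))
after = loss(xs, ys, *params)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

Comparing `bptt` against a finite-difference estimate of the loss gradient is a quick way to confirm that the backward pass is correct.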

One major challenge with RNNs is the vanishing gradient problem. During BPTT, the gradient that reaches an early time step is a product of one Jacobian factor per intervening step, so when those factors are small it shrinks roughly exponentially with the number of steps, and the parameters receive almost no signal about long-range dependencies. To address this, several RNN variants have been proposed, such as Long Short-Term Memory (LSTM) and the Gated Recurrent Unit (GRU), which use gating mechanisms to control how much of the hidden state is kept or overwritten at each step and thereby mitigate the vanishing gradient problem.
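The vanishing gradient, and how gating counteracts it, can be observed on scalar cells, where the chain rule gives dh_T/dh_0 as a product of one derivative per time step. The sketch below compares a tanh RNN with a GRU. It assumes one common GRU formulation, h_t = (1 - z_t)·n_t + z_t·h_{t-1} (conventions differ on whether z or 1 - z multiplies the old state), and every weight is a hand-picked, hypothetical value. In particular, the update-gate bias of 4.0 holds the gate near 1 so the cell mostly copies its previous state; in a trained network such values are learned rather than fixed.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def rnn_step(x, h, w_x=1.0, w_h=0.9, b=0.0):
    """Scalar tanh RNN step; returns (h_t, dh_t/dh_{t-1})."""
    h_new = math.tanh(w_x * x + w_h * h + b)
    return h_new, w_h * (1.0 - h_new * h_new)


def gru_step(x, h, z_w=(1.0, 1.0, 4.0), r_w=(1.0, 1.0, 0.0), n_w=(1.0, 0.9, 0.0)):
    """Scalar GRU step; each hypothetical tuple is (input weight, recurrent weight, bias).

    Convention: h_t = (1 - z) * n + z * h_{t-1}, so z near 1 copies the old state.
    Returns (h_t, dh_t/dh_{t-1}).
    """
    z = sigmoid(z_w[0] * x + z_w[1] * h + z_w[2])           # update gate
    r = sigmoid(r_w[0] * x + r_w[1] * h + r_w[2])           # reset gate
    n = math.tanh(n_w[0] * x + r * (n_w[1] * h) + n_w[2])  # candidate state
    h_new = (1.0 - z) * n + z * h
    # Derivatives of the gates and candidate with respect to the previous state h.
    dz = z * (1.0 - z) * z_w[1]
    dr = r * (1.0 - r) * r_w[1]
    dn = (1.0 - n * n) * (dr * n_w[1] * h + r * n_w[1])
    return h_new, z + dz * (h - n) + (1.0 - z) * dn


def run(step, xs, h0=0.5):
    """Return the final state and dh_T/dh_0 (the product of per-step derivatives)."""
    h, grad = h0, 1.0
    for x in xs:
        h, local = step(x, h)
        grad *= local
    return h, grad


for T in (5, 20, 50):
    xs = [0.0] * T  # zero inputs isolate the recurrent path
    _, g_rnn = run(rnn_step, xs)
    _, g_gru = run(gru_step, xs)
    print(f"T={T:2d}  vanilla RNN: {g_rnn:.2e}  GRU: {g_gru:.2e}")
```

With these values each vanilla RNN factor is at most 0.9, so its gradient shrinks roughly exponentially with T, while the GRU's factors stay close to 1 because the update gate provides a nearly direct path from h_{t-1} to h_t.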