What Are cGANs?

Conditional generative adversarial networks (cGANs) are deep learning models, widely used in AI art generation, that build on the generative adversarial network (GAN) architecture. The key difference is that a cGAN is conditioned on additional input data, such as a class label or a source image, which guides the generation of the output image.

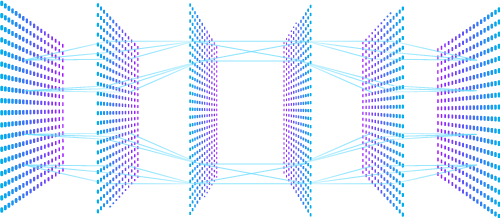

In a cGAN, the generator network takes both a noise vector and the additional input, and produces an output image conditioned on that input. For example, the additional input might be a label indicating the class of the image (such as “dog” or “cat”), or a source image that the generator should use as a reference. The discriminator network receives real and generated images together with the same additional input, and tries to classify each image-condition pair as real or fake. The generator is trained to produce images that fool the discriminator into classifying them as real, while the discriminator is trained to tell the two apart.
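To make the conditioning concrete, here is a minimal sketch in plain Python of how a label can be fed to both networks. It is an illustration under several assumptions, not a real implementation: each network is a single untrained linear layer, the "image" is a flat list of six numbers, the condition is a one-hot class label concatenated to the input, and all names (`one_hot`, `generator`, `discriminator`, `bce`) are hypothetical. The generator loss shown is the commonly used non-saturating form, which is one option among several.

```python
import math
import random

NUM_CLASSES = 2   # e.g. 0 = "cat", 1 = "dog" (illustrative labels)
NOISE_DIM = 4     # length of the noise vector z
IMAGE_DIM = 6     # a flattened toy "image" with 6 pixels


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode an integer class label as a one-hot list."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def init_weights(rows, cols, rng):
    """Random weight matrix for a single linear layer (no bias, for brevity)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def linear(weights, inputs):
    """Matrix-vector product: one output per weight row."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def generator(g_weights, noise, label):
    """G(z, y): the condition is concatenated to the noise vector."""
    conditioned = noise + one_hot(label)
    return [math.tanh(v) for v in linear(g_weights, conditioned)]


def discriminator(d_weights, image, label):
    """D(x, y): the same condition is concatenated to the image."""
    conditioned = image + one_hot(label)
    logit = linear(d_weights, conditioned)[0]
    return 1.0 / (1.0 + math.exp(-logit))  # probability that (x, y) is real


def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for a single prediction."""
    return -(target * math.log(prob + eps)
             + (1 - target) * math.log(1 - prob + eps))


rng = random.Random(0)
g_weights = init_weights(IMAGE_DIM, NOISE_DIM + NUM_CLASSES, rng)
d_weights = init_weights(1, IMAGE_DIM + NUM_CLASSES, rng)

label = 1
noise = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]
real_image = [rng.uniform(-1.0, 1.0) for _ in range(IMAGE_DIM)]  # stand-in for a data sample
fake_image = generator(g_weights, noise, label)

p_real = discriminator(d_weights, real_image, label)
p_fake = discriminator(d_weights, fake_image, label)

# Discriminator objective: score real pairs as 1 and generated pairs as 0.
d_loss = bce(p_real, 1.0) + bce(p_fake, 0.0)
# Generator objective (non-saturating form): push D's score on fakes toward 1.
g_loss = bce(p_fake, 1.0)

# Same noise, different condition: the output changes with the label.
other_image = generator(g_weights, noise, 0)
```

In practice the two networks would be deep convolutional models trained by alternating gradient steps on `d_loss` and `g_loss`; the sketch only shows where the condition enters each network and how the two losses oppose each other.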

Conditioning on additional input data allows more precise control over the generated images. For example, it can be used to generate images of a specific style or genre, or images that match a particular reference image. It can also be used to translate one image into another, for example turning an edge sketch or a label map into a photo-like image, as in the pix2pix technique.

cGANs have been used in a variety of applications in AI art generation, including style transfer, where the goal is to apply the style of one image to another, and image synthesis, where the goal is to generate new images that match a particular style or genre. They have also been used in scientific and engineering applications, such as generating simulated microscopy or astronomical images.